In the world of data science, there is a secret language that benefits those who understand it. Do you want to know what makes a data expert efficient? It’s a profound understanding of the data. Unfortunately, you can’t have a friendly conversation with the data, but don’t worry, statistics offers the next best solution.

Top 10 Statistical Concepts For Data Wizards In 2024


Here are the top ten statistical concepts that you must have in your arsenal. Whether you’re a budding data scientist, a seasoned professional, or merely intrigued by the inner workings of data-driven decision-making, prepare for an enthralling exploration of the statistical principles that underpin the world of data science.

1. Descriptive statistics:

We start with the most fundamental and essential statistical concept: descriptive statistics. Descriptive statistics are the methods and measures that summarize and describe the data. They are like the foundation of a building: a sturdy groundwork upon which further analysis can be constructed. Descriptive statistics can be broken down into measures of central tendency and measures of variability.

· Measures of central tendency:

A measure of central tendency is defined as “the number used to represent the center or middle of a set of data values”. It is a single value that is typically representative of the whole dataset. Such measures help us understand where the “average” or “central” point lies amidst a collection of data points.

There are a few techniques to find the central tendency of the data, namely “Mean” (average), “Median” (middle value when data is sorted), and “Mode” (most frequently occurring values).

· Measures of variability:

Measures of variability describe the spread, dispersion, and deviation of the data. In essence, they tell us how far the data points tend to lie from the central tendency. A few measures of variability are “Range”, “Variance”, “Standard Deviation”, and “Interquartile Range”. These provide valuable insights into the degree of variability or uniformity in the data.
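To make these measures concrete, here is a minimal sketch using Python's standard `statistics` module. The dataset is hypothetical and chosen only for illustration; the variance and standard deviation shown are the sample versions (dividing by n - 1).

```python
import statistics

# Hypothetical sample: daily order counts (illustrative values only)
data = [12, 15, 15, 18, 20, 22, 22, 22, 25, 30]

# Measures of central tendency
mean = statistics.mean(data)      # arithmetic average
median = statistics.median(data)  # middle value of the sorted data
mode = statistics.mode(data)      # most frequently occurring value

# Measures of variability
data_range = max(data) - min(data)
variance = statistics.variance(data)  # sample variance (n - 1 denominator)
std_dev = statistics.stdev(data)      # sample standard deviation
q1, q2, q3 = statistics.quantiles(data, n=4)  # quartile cut points
iqr = q3 - q1                         # interquartile range

print(f"mean={mean}, median={median}, mode={mode}")
print(f"range={data_range}, variance={variance:.2f}, "
      f"std_dev={std_dev:.2f}, IQR={iqr:.2f}")
```

Note that `statistics.quantiles` supports more than one interpolation method, so the exact interquartile range can differ slightly between tools.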

2. Inferential statistics:

Inferential statistics enable us to draw conclusions about a population from a sample of that population. Imagine having to decide whether a medicinal drug is good or bad for the general public. It is practically impossible to test it on every single member of the population.

This is where inferential statistics comes in handy. Inferential statistics employ techniques such as hypothesis testing and regression analysis (also discussed later) to determine the likelihood of observed patterns occurring by chance and to estimate population parameters.

This invaluable tool empowers data scientists and researchers to go beyond descriptive analysis and uncover deeper insights, allowing them to make data-driven conclusions and formulate hypotheses about the broader context from which the data was sampled.
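As a rough illustration of estimating a population parameter from a sample, the sketch below builds an approximate 95% confidence interval for a mean. The population is simulated and entirely hypothetical, and the interval relies on a normal approximation, which is an assumption that is reasonable here only because the sample is fairly large.

```python
import math
import random
import statistics

random.seed(0)  # fixed seed so the sketch is repeatable

# Hypothetical population: 100,000 simulated values (assumed for illustration)
population = [random.gauss(50, 10) for _ in range(100_000)]

# In practice we only observe a sample, here 200 members
sample = random.sample(population, 200)

sample_mean = statistics.mean(sample)
standard_error = statistics.stdev(sample) / math.sqrt(len(sample))

# Approximate 95% interval using the normal distribution's 97.5th percentile
z = statistics.NormalDist().inv_cdf(0.975)
ci_low = sample_mean - z * standard_error
ci_high = sample_mean + z * standard_error

print(f"sample mean = {sample_mean:.2f}")
print(f"approx. 95% CI = ({ci_low:.2f}, {ci_high:.2f})")
```

For small samples, a t-distribution would usually replace the normal approximation used here.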

3. Probability distributions:

Probability distributions serve as foundational concepts in statistics and mathematics, providing a structured framework for characterizing the probabilities of various outcomes in random events. These distributions, including well-known ones like the normal, binomial, and Poisson distributions, offer structured representations for understanding how data is distributed across different values or occurrences.

Much like navigational charts guiding explorers through uncharted territory, probability distributions function as reliable guides through the landscape of uncertainty, enabling us to quantitatively assess the likelihood of specific events.

They constitute essential tools for statistical analysis, hypothesis testing, and predictive modeling, furnishing a systematic approach to evaluate, analyze, and make informed decisions in scenarios involving randomness and unpredictability. Comprehension of probability distributions is imperative for effectively modeling and interpreting real-world data and facilitating accurate predictions.
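The three distributions named above can be sketched with the standard library alone. The helper functions `binomial_pmf` and `poisson_pmf` are written here for illustration, and the parameters (heights, coin flips, call rates) are hypothetical.

```python
import math
from statistics import NormalDist

def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def poisson_pmf(k, lam):
    """Probability of exactly k events when the average count is lam."""
    return math.exp(-lam) * lam**k / math.factorial(k)

# Normal: share of values within one standard deviation of the mean
heights = NormalDist(mu=170, sigma=10)  # hypothetical heights in cm
p_within_one_sd = heights.cdf(180) - heights.cdf(160)

# Binomial: exactly 3 heads in 10 fair coin flips
p_three_heads = binomial_pmf(3, 10, 0.5)

# Poisson: exactly 2 calls in an hour when the average is 4 per hour
p_two_calls = poisson_pmf(2, 4.0)

print(f"P(within 1 sd)  = {p_within_one_sd:.4f}")
print(f"P(3 heads of 10) = {p_three_heads:.4f}")
print(f"P(2 calls)       = {p_two_calls:.4f}")
```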

4. Sampling methods:

We now know that inferential statistics help us draw conclusions about the population from a sample of the population. How do we ensure that the sample is representative of the population? This is where sampling methods come to our aid.

Sampling methods are a set of methods that help us pick our sample set out of the population. Sampling methods are indispensable in surveys, experiments, and observational studies, ensuring that our conclusions are both efficient and statistically valid. There are many types of sampling methods. Some of the most common ones are defined below.

- Simple Random Sampling: A method where each member of the population has an equal chance of being selected for the sample, typically through random processes.

- Stratified Sampling: The population is divided into subgroups (strata), and a random sample is taken from each stratum in proportion to its size.

- Systematic Sampling: Selecting every “kth” element from a population list, using a systematic approach to create the sample.

- Cluster Sampling: The population is divided into clusters, and a random sample of clusters is selected, with all members in selected clusters included.

- Convenience Sampling: Selection of individuals/items based on convenience or availability, often leading to non-representative samples.

- Purposive (Judgmental) Sampling: Researchers deliberately select specific individuals/items based on their expertise or judgment, potentially introducing bias.

- Quota Sampling: The population is divided into subgroups, and individuals are purposively selected from each subgroup to meet predetermined quotas.

- Snowball Sampling: Used in hard-to-reach populations, where participants refer researchers to others, leading to an expanding sample.
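Three of the probability-based methods listed above (simple random, systematic, and stratified sampling) can be sketched as follows. The population, the `region` field, and the helper function names are all hypothetical and exist only for this example.

```python
import random

random.seed(42)  # fixed seed so the sketch is repeatable

# Hypothetical population of 100 people: 60 in "north", 40 in "south"
population = [
    {"id": i, "region": "north" if i < 60 else "south"} for i in range(100)
]

def simple_random_sample(items, n):
    """Every member has an equal chance of selection."""
    return random.sample(items, n)

def systematic_sample(items, k):
    """Pick a random start, then take every kth element."""
    start = random.randrange(k)
    return items[start::k]

def stratified_sample(items, key, n):
    """Sample each stratum in proportion to its share of the population."""
    strata = {}
    for item in items:
        strata.setdefault(item[key], []).append(item)
    sample = []
    for members in strata.values():
        # Rounding may not sum exactly to n for awkward proportions
        share = round(n * len(members) / len(items))
        sample.extend(random.sample(members, share))
    return sample

srs = simple_random_sample(population, 10)
systematic = systematic_sample(population, 10)
stratified = stratified_sample(population, "region", 10)

print([p["id"] for p in srs])
print([p["id"] for p in systematic])
print([(p["id"], p["region"]) for p in stratified])
```

With these inputs, the stratified sample contains six members from the north and four from the south, mirroring the 60/40 split in the population.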

5. Regression analysis:

Regression analysis is a statistical approach that helps us quantify the relationship between a dependent variable and one or more independent variables. It’s like drawing a line through data points to understand and predict how changes in one variable relate to changes in another.

Regression techniques, such as linear regression or logistic regression, are used to uncover patterns and associations in diverse fields like economics, healthcare, and social sciences. This technique empowers researchers to make predictions, gain insights into complex phenomena, and, when paired with a suitable study design, analyze cause-and-effect connections. On its own, a regression fit shows association rather than causation.
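The "line through data points" idea can be sketched with ordinary least squares for a single independent variable. The data and the `fit_line` helper below are hypothetical and written for illustration only.

```python
# Hypothetical data: hours studied vs. exam score
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]

def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Covariance-like and variance-like sums of deviations from the means
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

slope, intercept = fit_line(hours, scores)
predicted = intercept + slope * 6  # predicted score after 6 hours

print(f"score = {intercept:.2f} + {slope:.2f} * hours")
print(f"predicted score for 6 hours: {predicted:.2f}")
```

Here the slope estimates how many points the score changes per additional hour studied, within the range of the observed data.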

6. Hypothesis testing:

Hypothesis testing is a key statistical method used to assess claims or hypotheses about a population using sample data. It’s like a process of weighing evidence to determine if there’s enough proof to support a hypothesis.

Researchers formulate a null hypothesis and an alternative hypothesis, then use statistical tests to evaluate whether the data supports rejecting the null hypothesis in favor of the alternative.

This method is crucial for making informed decisions, drawing meaningful conclusions, and assessing the significance of observed effects in various fields of research and decision-making.
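One way to see this process in action is a permutation test, sketched below. It is only one of many possible tests, chosen here because it needs no distribution tables. The measurements are hypothetical. The null hypothesis is that group labels do not matter, so the labels are shuffled repeatedly to see how often a difference at least as large as the observed one appears by chance.

```python
import random

random.seed(1)  # fixed seed so the sketch is repeatable

# Hypothetical measurements for two groups
control = [20.1, 19.8, 21.0, 20.5, 19.9, 20.3, 20.7, 20.0]
treatment = [21.2, 21.8, 20.9, 22.1, 21.5, 21.0, 22.3, 21.7]

def mean(values):
    return sum(values) / len(values)

# Observed difference in means (treatment minus control)
observed = mean(treatment) - mean(control)

pooled = control + treatment
n_control = len(control)
n_permutations = 10_000
extreme = 0

for _ in range(n_permutations):
    random.shuffle(pooled)  # reassign group labels at random
    diff = mean(pooled[n_control:]) - mean(pooled[:n_control])
    if abs(diff) >= abs(observed):  # two-sided comparison
        extreme += 1

# Add one to numerator and denominator so the estimate is never exactly zero
p_value = (extreme + 1) / (n_permutations + 1)
alpha = 0.05
reject_null = p_value < alpha

print(f"observed difference = {observed:.3f}")
print(f"p-value = {p_value:.4f}, reject null at {alpha}: {reject_null}")
```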

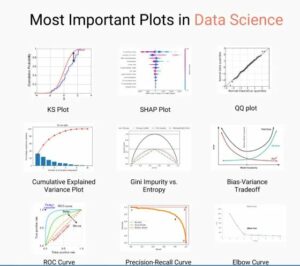

7. Data visualizations:

Data visualization is the art and science of representing complex data in a visual and comprehensible form. It’s like translating the language of numbers and statistics into a graphical story that anyone can understand at a glance.

Effective data visualization not only makes data more accessible but also allows us to spot trends, patterns, and outliers, making it an essential tool for data analysis and decision-making. Whether through charts, graphs, maps, or interactive dashboards, data visualization empowers us to convey insights, share information, and gain a deeper understanding of complex datasets.

8. ANOVA (Analysis of variance):

Analysis of Variance (ANOVA) is a statistical procedure used to compare the means of two or more groups to determine whether there are significant differences among them. It’s like the referee in a sports tournament, checking if there’s enough evidence to conclude that the teams’ performances are different.

ANOVA calculates a test statistic (the F-statistic) and a p-value, which indicates how likely it would be to see differences in means at least as large as those observed if all groups actually shared the same mean.

This method is widely used in research and experimental studies, allowing researchers to assess the impact of different factors or treatments on a dependent variable and draw meaningful conclusions about group differences. ANOVA is a powerful tool for hypothesis testing and plays a vital role in various fields, from medicine and psychology to economics and engineering.
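The test statistic for a one-way ANOVA can be computed by hand, as in the sketch below. The groups and the `one_way_anova_f` helper are hypothetical. The p-value is not computed here, since it requires the F-distribution, which the standard library does not provide.

```python
def one_way_anova_f(groups):
    """Return the F-statistic and degrees of freedom for a one-way ANOVA."""
    all_values = [v for g in groups for v in g]
    grand_mean = sum(all_values) / len(all_values)
    k = len(groups)      # number of groups
    n = len(all_values)  # total number of observations

    # Variation of group means around the grand mean
    ss_between = sum(
        len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups
    )
    # Variation of observations around their own group mean
    ss_within = sum((v - sum(g) / len(g)) ** 2 for g in groups for v in g)

    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n - k)
    return ms_between / ms_within, (k - 1, n - k)

# Hypothetical outcomes under three treatments
groups = [[4, 5, 6], [6, 7, 8], [8, 9, 10]]
f_stat, (df_between, df_within) = one_way_anova_f(groups)

print(f"F = {f_stat:.2f} with ({df_between}, {df_within}) degrees of freedom")
```

A large F-statistic means the group means differ by more than the spread within groups would suggest.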

9. Time series analysis:

Time series analysis is a specialized field of statistics and data science that focuses on studying data points collected, recorded, or measured over time. It’s like examining the historical trajectory of a variable to understand its patterns and trends.

Time series analysis involves techniques for data visualization, smoothing, forecasting, and modeling to uncover insights and make predictions about future values.

This discipline finds applications in various domains, from finance and economics to climate science and stock market predictions, helping analysts and researchers understand and harness the temporal patterns within their data.
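Smoothing, one of the techniques mentioned above, can be sketched with a simple moving average. The sales figures and the `moving_average` helper are hypothetical, and using the last smoothed value as a forecast is a deliberately naive choice made only for illustration.

```python
def moving_average(series, window):
    """Average each run of `window` consecutive observations."""
    return [
        sum(series[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(series))
    ]

# Hypothetical monthly sales, oldest first
sales = [10, 12, 14, 13, 15, 18, 17, 19]

smoothed = moving_average(sales, window=3)

# Naive forecast: assume next month matches the latest smoothed level
forecast = smoothed[-1]

print("smoothed:", [round(v, 2) for v in smoothed])
print("naive forecast for next month:", round(forecast, 2))
```

A wider window smooths out more noise but reacts more slowly to real changes in the trend.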

10. Bayesian statistics:

Bayesian statistics is a branch of statistics that takes a distinct approach to probability and inference. Unlike classical (frequentist) statistics, which treats parameters as fixed but unknown quantities, Bayesian statistics treats probability as a measure of uncertainty, updating beliefs based on prior information and new evidence.
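The idea of updating beliefs can be sketched with a Beta prior on a success rate, which has a convenient closed-form update when the data are counts of successes and failures. The prior parameters and the observed counts below are hypothetical.

```python
# Prior belief about a success rate, expressed as Beta(alpha, beta).
# Beta(2, 2) is a mild assumption that the rate is probably near 0.5.
prior_alpha, prior_beta = 2, 2

# Hypothetical new evidence: 7 successes and 3 failures in 10 trials
successes, failures = 7, 3

# Conjugate update: add observed counts to the prior parameters
post_alpha = prior_alpha + successes
post_beta = prior_beta + failures

# The mean of a Beta(a, b) distribution is a / (a + b)
prior_mean = prior_alpha / (prior_alpha + prior_beta)
posterior_mean = post_alpha / (post_alpha + post_beta)

print(f"prior mean     = {prior_mean:.3f}")
print(f"posterior mean = {posterior_mean:.3f}")
```

The posterior mean lands between the prior mean and the observed success rate of 0.7, and more data would pull it further toward the observed rate.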